For quite a few years I have found the concept of microfoundations to be central for thinking about relationships across levels of social and individual activity. Succinctly, I have argued that, while it is perfectly legitimate to formulate theories and hypotheses about the properties and causal powers of higher-level social entities, it is necessary that those entities should have microfoundations at the level of the structured activities of socially situated individuals. Higher-level social things need microfoundations at the level of the individuals whose actions and thoughts create the social entity or power. (I have also used the idea of "methodological localism" to express this idea; link.) A fresh look at the presuppositions of the concept makes me more doubtful about its validity, however.

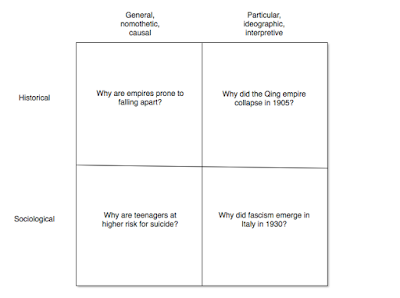

This concept potentially plays two different roles within the philosophy of social science. First, it might serve as a methodological requirement about the nature of social explanation: explanations of social phenomena need to take the form of detailed accounts of the pathways that bring them about at the level of individual socially situated actors. Second, it might be understood as an ontological requirement about acceptable social constructs: higher-level social constructs must be such that it is credible that they are constituted by patterns of individual-level activity. Neither is straightforward.

Part of the appeal of the concept of microfoundations derived from a very simple and logical way of understanding certain kinds of social explanation. This was the idea that slightly mysterious claims about macro-level phenomena (holistic claims) can often be given very clear explanations at the micro-level. Marx’s claim that capitalism is prone to crises arising from a tendency for the rate of profit to fall is a good example. Marx himself specifies the incentives facing the capitalist that lead him or her to make investment decisions aimed at increasing profits; he shows that these incentives lead to a substitution of fixed capital for variable capital (machines for labor); profits are created by labor; so the ratio of profit to total capital investment will tend to fall. This is a microfoundational explanation, in that it demonstrates the individual-level decision making and action that lead to the macro-level result.
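The arithmetic behind this argument can be sketched in a line. The symbols are not used in the text above and are introduced here only as an assumption for illustration, following the conventional notation for Marx's categories: $c$ for fixed (constant) capital, $v$ for variable capital (wages), $s$ for the surplus produced by labor, and $r$ for the rate of profit.

```latex
r \;=\; \frac{s}{c + v} \;=\; \frac{s/v}{\,c/v + 1\,}
```

On this reading, if the ratio $s/v$ is held roughly constant while individual capitalists substitute machines for labor (so that $c/v$ rises), the denominator grows and $r$ falls. The conclusion depends on the assumption that $s/v$ does not rise fast enough to offset the change, which is why the text speaks of a tendency rather than a law.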

There is another reason why the microfoundations idea was appealing: the ontological discipline it imposed with respect to theories and hypotheses at the higher level of social structure and causation. The requirement of providing microfoundations was an antidote to lazy thinking in the realm of social theory. Elster's critique of G. A. Cohen's functionalism in *Karl Marx's Theory of History* is a case in point; Elster argued convincingly that a claim that "X exists because it brings about Y benefits for the system in which it exists" can only be supported if we can demonstrate the lower-level causal processes that allow the prospect of future system benefits to influence X (link). Careless functionalism is unsupportable. More generally, the idea that there are social properties that are fundamental and emergent is flawed in the same way that vitalist biology is flawed. Biological facts are embedded within the material biochemistry of the cell and the gene, and claims that postulate a "something extra" over and above biochemistry involve magical thinking. Likewise, social facts are somehow or other embedded within and created by a substratum of individual action.

In short, there are reasons to find the microfoundations approach appealing. However, I'm inclined to think that it is less compelling than it appears to be.

First, methodology. The microfoundations approach is a perfectly legitimate explanatory strategy; but it is only one approach out of many. So searching for microfoundations ought to be considered an explanatory heuristic rather than a methodological necessity. Microfoundational accounts represent one legitimate form of social explanation (micro-to-meso); but so do "lateral" accounts (meso-to-meso explanations) or even "descending" accounts (macro-to-meso explanations). So a search for microfoundations is only one among a number of valid explanatory approaches we might take. Analytical sociology is one legitimate approach to social research; but there are other legitimate approaches as well (link).

Second, social ontology. The insistence that social facts must rest upon microfoundations is one way of expressing the idea of ontological dependency of the social upon the individual level (understanding, of course, that individuals themselves have social properties and constraints). But perhaps there are other and more compelling ways of expressing this idea. One is the idea of ontological individualism. This is the view that social entities, powers, and conditions are all constituted by the actions and thoughts of individual human beings, and nothing else. The social world is constituted by the socially situated individuals who make it up. Brian Epstein articulates this requirement very clearly here: "Ontological individualism is the thesis that facts about individuals exhaustively determine social facts" (link). This formulation makes it evident that individualism and microfoundations are closely linked. In particular, ontological individualism is true if and only if all social facts possess microfoundations at the level of socially situated individuals.

The microfoundations approach seems to suggest a coherent and strong position about the nature of the social world and the nature of social explanation; call this the "strong theory" of microfoundations:

- There are discernible and real differences in level in various domains, including the domain of the social.

- Higher-level entities depend on the properties and powers of lower-level constituents and nothing else.

- The microfoundations of a higher-level thing are the particular arrangements and actions of the lower-level constituents that bring about the properties of the higher-level thing.

- The gold standard for an explanation for a higher-level fact is a specification of the microfoundations of the thing.

- At the very least we need to be confident that microfoundations exist for the higher-level thing.

- There are no "holistic" or non-reducible social entities.

- There is no lateral or downward social causation.

Taken together, this position amounts to a fairly specific and narrow view of the social world -- indeed, excessively so. It fully incorporates the assumptions of ontological individualism, it postulates that generative microfoundational explanations are the best kind of social explanation, and it rules out several other credible lines of thought about social causation.

In fact, we might want to be agnostic about ontological individualism and the strong theory of microfoundations for a couple of reasons. One is the possibility of downward and lateral causation from meso or macro level to meso level. Another is the possibility raised by Searle and Epstein that there may be social facts that cannot be disaggregated into facts about individuals (the validity of a marriage, for example; link). A third is the difficult question of whether there might be reasons for thinking that a lower level of organization (e.g. the cognitive system or neurophysiology) is more compelling than a folk theory of individual behavior. Finally, the metaphor of levels and strata itself may be misleading or incoherent as a way of understanding the realm of the social; it may turn out to be impossible to draw clear distinctions between levels of the social. (This is the rationale for the idea of a "flat" social ontology; link.) So there seem to be a handful of important reasons for thinking that we may want to suspend judgment about the correctness of ontological individualism.

Either way, the microfoundations thesis seems to be questionable. If ontological individualism is true, then it follows trivially that there are microfoundations for a given social fact. If ontological individualism is false, then the microfoundations thesis as an ontological thesis is false as well -- there will be social properties that lack microfoundations at the individual level. Either way, the key question is the truth or falsity of ontological individualism.

Two things now seem clearer to me than they did some years ago. First, microfoundationalism is not a general requirement on social explanation. It is rather one explanatory strategy out of many. And second, microfoundationalism is not necessarily the best way of articulating the ontology of the social world. A more direct approach is to simply specify that the social world is constituted by the activities and thoughts of individuals and the artifacts that they create. The principle of ontological individualism seems to express this view very well. And when the view is formulated clearly, its possible deficiencies become clear as well. So I'm now inclined to think that the idea of microfoundations is less useful than it once appeared to be. This doesn't mean that the microfoundations concept is incoherent or misleading; but it does mean that it does not give rise to imperatives for social science, either of methodology or of ontology.